The global software-as-a-service (SaaS) industry is currently undergoing its most significant transformation since the transition from on-premises hosting to the cloud. By the end of 2025, the artificial intelligence (AI) segment of the SaaS market is projected to reach approximately $126 billion, serving as the primary engine for a broader $300 billion SaaS ecosystem. This era, often described by industry analysts as “First Light,” represents a shift from the initial chaotic “Big Bang” of generative AI toward a structured environment defined by foundational companies, established build patterns, and rigorous success metrics. As organizations move past the experimentation phase, the criteria for classifying AI SaaS products have evolved from simple functional labels into a complex, multidimensional taxonomy that accounts for architectural integrity, autonomous capability, unit economics, and regulatory compliance.

The strategic necessity of this classification framework cannot be overstated. In a market where 90% of AI startups are projected to fail by 2026 due to unsustainable economics or a lack of competitive moats, the ability to distinguish genuine AI-native innovations from superficial “thin wrappers” has become a core responsibility of investors and enterprise buyers. Furthermore, as SaaS giants integrate agentic layers into their legacy systems, the divide between “AI-enhanced” incumbents and “AI-first” challengers is creating a new competitive dynamic that demands precise categorization to ensure long-term operational stability.

Foundations of Functional and Technical Categorization

The initial filter for any AI SaaS product remains its core functionality—the specific problem it purports to solve and the mechanism through which it delivers value. However, in 2025, this classification has deepened to include the “intelligence level” and the underlying technical stack, which dictate the product’s reliability and scalability.

Intelligence Levels and Model Architectures

Classification begins with the technological engine. While generative models have dominated the public discourse, the enterprise market relies on a broader spectrum of AI types, often utilized in hybrid configurations to balance creativity with deterministic accuracy.

| AI Classification Type | Core Mechanism | Enterprise Application Suitability |
|---|---|---|
| Rule-Based / Traditional AI | Predefined algorithms and IF-THEN logic. | Tasks requiring 100% consistency, auditability, and transparency. |
| Predictive AI | Statistical methods, regression, and time series analysis. | Financial forecasting, churn prediction, and supply chain optimization. |
| Generative AI | Transformers, diffusion models, and GANs. | Content creation, code generation, and synthetic data augmentation. |
| Conversational AI | NLP, dialogue management, and context-aware LLMs. | Customer support, real-time engagement, and personalized virtual assistance. |
| Agentic AI | Autonomous planning, tool use, and state tracking. | Complex multi-step workflows, autonomous SDRs, and self-healing systems. |

The nuance in this classification lies in the “explainability” of the output. Predictive AI is generally more explainable because its results are grounded in historical statistics and numerical patterns. In contrast, many generative models lack transparency in their decision-making processes, leading to a classification tier that requires higher levels of governance and “evals” (evaluation frameworks) for enterprise adoption.
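The hybrid configurations mentioned above often pair a generative model with deterministic checks, so that an auditable rule rather than the model has the final say. The sketch below is a minimal illustration under stated assumptions: `fake_generate` is a hypothetical stand-in for a model call, and the refund limit and both rules are invented for the example.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

MAX_REFUND = 200.00  # assumed business limit for this illustration


@dataclass
class Verdict:
    accepted: bool
    reasons: list[str] = field(default_factory=list)


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a generative model call."""
    return "We have issued a refund of $250.00 to your card."


def rule_refund_limit(text: str) -> str | None:
    """IF any dollar amount exceeds the limit THEN reject, citing the amount."""
    for amount in re.findall(r"\$(\d+(?:\.\d{2})?)", text):
        if float(amount) > MAX_REFUND:
            return f"refund ${amount} exceeds limit ${MAX_REFUND:.2f}"
    return None


def rule_no_guarantees(text: str) -> str | None:
    """IF the text promises a guarantee THEN reject."""
    if "guarantee" in text.lower():
        return "output makes a guarantee"
    return None


RULES = [rule_refund_limit, rule_no_guarantees]


def validate(text: str) -> Verdict:
    # Run every rule so the audit trail lists all violations, not just the first.
    reasons = [reason for rule in RULES if (reason := rule(text)) is not None]
    return Verdict(accepted=not reasons, reasons=reasons)


print(validate(fake_generate("Customer asks for a refund")))
# Verdict(accepted=False, reasons=['refund $250.00 exceeds limit $200.00'])
```

Because each rejection carries a plain-language reason, the deterministic layer supplies the explainability that the generative layer lacks.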

The Technical Stack as a Growth Signal

Investors increasingly classify AI SaaS products by their development frameworks, because the choice of stack influences everything from research flexibility to production stability. In 2026, PyTorch and TensorFlow still dominate, though their roles have clearly bifurcated. PyTorch, which claimed over 55% of the production share by Q3 2025, is generally regarded as the preferred framework for rapid experimentation and NLP tasks, thanks to its dynamic computation graphs and deep integration with Hugging Face. TensorFlow, by contrast, remains the standard for battle-tested, high-volume production environments where mobile deployment (via TensorFlow Lite, since renamed LiteRT) and operational maturity are prioritized over research speed.

A third option, JAX, has emerged for teams whose large-scale training workloads justify a steeper learning curve in exchange for computational performance. For organizations focused on traditional structured-data problems, such as fraud detection or customer churn, scikit-learn remains the default choice for “traditional machine learning,” often outperforming deep learning models in CPU-only environments where GPU costs would be prohibitive.

Architectural Integrity: AI-Native vs. AI-Enhanced

One of the most critical classification divides in 2026 is the distinction between AI-native and AI-enhanced (or AI-embedded) architectures. This classification determines not just how a product functions today, but its ability to adapt to a rapidly shifting technological landscape.

Defining the AI-Native Standard

An AI-native product is defined as a solution created from the ground up with artificial intelligence as its foundational component. The architecture, user experience, and value proposition are so deeply intertwined with AI that removing the models would cause the product to cease functioning. These systems are designed around models and data pipelines rather than static code and fixed workflows.

The financial performance of AI-native companies provides a compelling case for this classification. Data indicates that AI-native startups reach $5 million in ARR significantly faster—averaging 25 months compared to 35 months for their non-native peers. Furthermore, these companies are achieving unprecedented efficiency levels, with some “Supernova” startups demonstrating an ARR of $1.13 million per full-time employee (FTE), roughly 4-5 times higher than traditional SaaS benchmarks.

The AI-Enhanced Incumbent Model

AI-enhanced products represent the “incumbent’s response” to the AI revolution. These are established platforms—such as legacy CRMs or project management tools—that bolt AI features onto an existing traditional stack. While these features, like an AI-powered meeting note writer, provide immediate value, the core architecture remains a system of record where the user must still navigate dashboards and manually initiate workflows.

A significant issue within this classification is “cannibalization risk.” Because incumbent revenue often depends on seat-based pricing, true automation (which reduces the need for human “seats”) threatens their existing business model. Consequently, many AI-enhanced products are classified by their “multi-layered pricing” structures, where AI is sold as a premium add-on or a separate tier to protect traditional recurring revenue.
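The arithmetic behind cannibalization risk can be sketched with hypothetical numbers: 100 seats at $50 per month, automation that removes 30% of the seats, and an AI add-on priced at $20 per remaining seat. None of these figures come from a real vendor; they only illustrate why incumbents wrap AI in a separate tier.

```python
def monthly_revenue(seats: int, seat_price: float, addon_price: float = 0.0) -> float:
    """Seat-based revenue, optionally with a per-seat AI add-on tier."""
    return seats * (seat_price + addon_price)


def seats_after_automation(seats: int, automation_share: float) -> int:
    """Seats still needed once AI absorbs a share of the human work."""
    return round(seats * (1 - automation_share))


# Hypothetical customer: 100 seats at $50/month; automation removes 30% of seats.
baseline = monthly_revenue(100, 50.0)
remaining = seats_after_automation(100, 0.30)
automated = monthly_revenue(remaining, 50.0)
# An assumed $20/seat AI add-on tier recovers part of the lost revenue.
with_addon = monthly_revenue(remaining, 50.0, addon_price=20.0)

print(baseline, automated, with_addon)  # 5000.0 3500.0 4900.0
```

Under these assumptions, automation alone cuts revenue by 30%, while the add-on tier narrows the gap to 2%.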

Market Alignment: The “Riches in Niches” Paradox

The classification of AI SaaS into horizontal and vertical models has reached a point of maturity where the strategic trade-offs are well-documented. Horizontal AI refers to universal solutions designed to perform common computational tasks, such as predictive analytics or natural language processing, that can be adapted across diverse industries. Examples include general-purpose platforms such as OpenAI’s ChatGPT, Anthropic’s Claude, or HubSpot.

Vertical AI, by contrast, involves specialized systems built for a single industry or business function, such as healthcare diagnostics, legal review, or financial compliance. “Verticalization” is a dominant trend in 2026, as precision-focused platforms offer specialized depth that generic models cannot match.

| Trait | Vertical AI SaaS | Horizontal AI SaaS |
|---|---|---|
| Target Market | One niche industry (e.g., Legal, Healthcare). | Multiple industries and broad functions. |
| Training Data | Massive amounts of industry-specific data. | Broad, diverse datasets. |
| Customization | High; tailored to specific regulatory nuances. | Moderate; versatile but lacks specialized depth. |
| Sales Strategy | “Land and Expand” with deep domain understanding. | High sales efficiency across a broad market. |
| Integration | Deeply integrated with industry platforms (e.g., Epic, SAP). | API-first; connects quickly to standard tools (e.g., Slack). |

The “Riches in Niches” philosophy suggests that while Vertical AI has a smaller total addressable market (TAM) than horizontal tools, it builds significantly higher defensive moats due to the complexity of digitizing industry-specific functions and navigating government regulations like HIPAA or GDPR.

Agentic AI: Autonomy and Orchestration Levels

The emergence of agentic systems represents the most significant shift in product classification since 2023. Unlike early AI tools that required human prompting (Co-pilots), agentic AI autonomously detects signals, makes decisions, and executes actions (Auto-pilots).

The Four Levels of Agentic Maturity

To assist organizations in classifying their autonomous capabilities, a maturity framework has been established that measures the proportion of revenue-generating or operational activities handled without human intervention.

- Level 1 – AI-Assisted Tasks: AI acts as a productivity aid (e.g., email drafting, note-taking). Humans retain full ownership of the workflow coordination and timing.

- Level 2 – Partial Agent Workflows: Agents automate discrete segments, such as inbound lead enrichment, but operate alongside manual systems where handoffs are still human-managed.

- Level 3 – Agent-Led Execution: Agents own complete workflows end-to-end within defined domains. Prospecting or support ticket resolution is autonomous, with humans intervening only for final negotiations or escalations.

- Level 4 – Fully Orchestrated Systems: Multiple agents operate as a coordinated ecosystem across the full lifecycle. They share signals, dynamically adjust priorities, and optimize execution without human supervision.
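One way to operationalize the four levels is a small classifier over yes/no properties taken from the level descriptions above. The field names are hypothetical; the framework as described measures the share of activities handled without human intervention and does not prescribe these exact checks.

```python
from dataclasses import dataclass
from enum import IntEnum


class AgenticLevel(IntEnum):
    AI_ASSISTED = 1
    PARTIAL_AGENT = 2
    AGENT_LED = 3
    FULLY_ORCHESTRATED = 4


@dataclass
class WorkflowProfile:
    # Hypothetical properties derived from the level descriptions.
    agent_automates_any_segment: bool         # Level 2: agents run discrete segments
    agent_owns_end_to_end: bool               # Level 3: complete workflows in a domain
    agents_coordinate_across_lifecycle: bool  # Level 4: multi-agent ecosystem
    human_supervision_required: bool          # Level 4 runs without supervision


def classify(p: WorkflowProfile) -> AgenticLevel:
    # Check from the highest level down; the first satisfied condition wins.
    if (p.agents_coordinate_across_lifecycle and p.agent_owns_end_to_end
            and not p.human_supervision_required):
        return AgenticLevel.FULLY_ORCHESTRATED
    if p.agent_owns_end_to_end:
        return AgenticLevel.AGENT_LED
    if p.agent_automates_any_segment:
        return AgenticLevel.PARTIAL_AGENT
    return AgenticLevel.AI_ASSISTED


support_desk = WorkflowProfile(
    agent_automates_any_segment=True,
    agent_owns_end_to_end=True,              # tickets resolved autonomously
    agents_coordinate_across_lifecycle=False,
    human_supervision_required=True,         # humans still handle escalations
)
print(classify(support_desk).name)  # AGENT_LED
```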

Orchestration vs. Automation

A critical distinction in this classification is the shift from “automation” to “orchestration.” Most companies stall after building a handful of useful agents in isolation—one for pricing, one for fulfillment. The real breakthrough occurs with the implementation of a “conductor agent” that coordinates decisions across these functions. Without orchestration, organizations risk scaling “silos at machine speed,” where one agent’s actions may conflict with another’s.
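A minimal sketch of a conductor, assuming each agent proposes actions against a shared resource (such as an order ID) and that collisions are resolved by a fixed precedence order. The agent names and the precedence scheme are hypothetical; a real conductor would likely weigh shared signals and business rules rather than a static ranking.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    agent: str     # which agent proposed the action
    resource: str  # shared object the action touches, e.g. an order ID
    action: str    # e.g. "discount_10pct" or "hold"


# Hypothetical precedence: lower rank wins when proposals collide on a resource.
PRIORITY = {"fulfillment": 0, "pricing": 1, "marketing": 2}


def rank(p: Proposal) -> int:
    # Unknown agents rank last rather than raising.
    return PRIORITY.get(p.agent, len(PRIORITY))


def conduct(proposals: list[Proposal]) -> tuple[list[Proposal], list[Proposal]]:
    """Return (approved, overridden) so conflicts are recorded, not silently applied."""
    by_resource: dict[str, list[Proposal]] = defaultdict(list)
    for p in proposals:
        by_resource[p.resource].append(p)

    approved: list[Proposal] = []
    overridden: list[Proposal] = []
    for props in by_resource.values():
        winner = min(props, key=rank)
        approved.append(winner)
        # Proposals that disagree with the winner are overridden; duplicates are dropped.
        overridden.extend(p for p in props if p.action != winner.action)
    return approved, overridden


approved, overridden = conduct([
    Proposal("pricing", "order-42", "discount_10pct"),
    Proposal("fulfillment", "order-42", "hold"),
    Proposal("marketing", "order-7", "send_coupon"),
])
print([p.action for p in approved])   # ['hold', 'send_coupon']
print([p.agent for p in overridden])  # ['pricing']
```

Returning the overridden proposals, rather than discarding them, keeps a trace of where agents disagreed.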

The need for coordination has driven adoption of the Model Context Protocol (MCP), an open standard introduced by Anthropic for connecting models to tools and data, and the Agent2Agent (A2A) protocol, introduced by Google for secure inter-agent communication. Support for these protocols is now a core criterion for classifying agentic systems as “enterprise-ready.”
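MCP messages use JSON-RPC 2.0, and a client invokes a server-side tool with the `tools/call` method, passing the tool `name` and an `arguments` object. The sketch below only builds and serializes such a request; the tool name `lookup_invoice` and its arguments are hypothetical, and transport (stdio or HTTP), session initialization, and capability negotiation are omitted.

```python
import itertools
import json

_ids = itertools.count(1)  # request IDs must not be reused within a session


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style JSON-RPC 2.0 request that invokes one tool."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool exposed by an MCP server.
print(tools_call_request("lookup_invoice", {"invoice_id": "INV-1001"}))
```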

The Economic Reckoning: Unit Economics and Pricing Evolution

Perhaps the most disruptive change in AI SaaS classification involves the fundamental restructuring of cost foundations and monetization strategies. In 2026, pricing is no longer just a line item; it is a strategic lever that defines the growth trajectory of the business.

Shift from Access to Outcomes

Traditional SaaS was built on the “seat-based” model, selling access to a platform. However, because AI can now replace the very humans who would occupy those seats, companies are shifting toward “output-based” or “success-based” pricing.

- Output-Based Models: Pricing is tied to the “work done,” such as Intercom charging for resolved customer issues rather than the number of support agents.

- Token and Credit Systems: A resurgence in token-based pricing allows providers to manage the high variable costs of inference.

- Success-Based Metrics: In industries like fintech, companies like Chargeflow take a percentage of successfully recovered chargebacks, aligning the provider’s revenue directly with the customer’s ROI.
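The three models differ mainly in what the invoice is indexed to. The functions below compute one month’s bill under each; every rate and volume is a hypothetical assumption, not Intercom’s or Chargeflow’s actual pricing.

```python
def output_based(resolved_issues: int, price_per_resolution: float) -> float:
    # Bill for work completed, e.g. each customer issue the AI resolves.
    return resolved_issues * price_per_resolution


def token_based(tokens_used: int, price_per_million: float) -> float:
    # Bill for inference consumption, passing variable compute cost through.
    return tokens_used / 1_000_000 * price_per_million


def success_based(recovered_amount: float, fee_rate: float) -> float:
    # Bill a share of value actually delivered, e.g. recovered chargebacks.
    return recovered_amount * fee_rate


print(output_based(1_200, 1.00))       # 1200.0
print(token_based(50_000_000, 2.00))   # 100.0
print(success_based(40_000.0, 0.25))   # 10000.0
```

Only the success-based bill moves in lockstep with the customer’s recovered revenue; the other two track volume, whether or not that volume creates value.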

The Marginal Cost Problem

Unlike traditional software, where marginal unit costs were nearly zero, AI SaaS incurs significant per-unit computing costs, whether it relies on a proprietary model or an open-source one. This has handed finance teams new “cost-based homework”: they must now quantify these costs and restructure pricing policies to ensure long-term viability.

The cost of inference has bifurcated the market into “AI Supernovas” and “AI Shooting Stars.” Supernovas prioritize rapid distribution and often operate at low gross margins (~25%) in their early stages, trading profit for market share. Shooting Stars maintain healthier margins (~60%) and grow quickly, but at rates still bounded by traditional organizational bottlenecks.
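The cost-based homework usually starts from per-unit gross margin. The sketch below assumes hypothetical token counts, per-million-token rates, and a per-request price; actual rates vary widely by model and provider.

```python
def inference_cost(input_tokens: int, output_tokens: int,
                   in_rate_per_m: float, out_rate_per_m: float) -> float:
    """Cost of one request given per-million-token input and output rates."""
    return (input_tokens * in_rate_per_m + output_tokens * out_rate_per_m) / 1_000_000


def gross_margin(price: float, unit_cost: float) -> float:
    """Gross margin as a fraction of price."""
    return (price - unit_cost) / price


def price_for_margin(unit_cost: float, target_margin: float) -> float:
    """Minimum price per unit that achieves the target gross margin."""
    return unit_cost / (1 - target_margin)


# Hypothetical request: 4,000 input tokens at $3/M and 1,000 output tokens at $15/M.
cost = inference_cost(4_000, 1_000, 3.0, 15.0)
print(f"cost per request: ${cost:.4f}")                               # cost per request: $0.0270
print(f"margin at $0.05/request: {gross_margin(0.05, cost):.0%}")      # margin at $0.05/request: 46%
print(f"price for 60% margin: ${price_for_margin(cost, 0.60):.4f}")   # price for 60% margin: $0.0675
```

Under these assumptions, a $0.05 price lands between the Supernova and Shooting Star margin profiles; reaching ~60% requires pricing near $0.0675 per request or cutting inference cost.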

| Growth Classification | ARR Year 1 | ARR Year 2 | Gross Margin | ARR / FTE |
|---|---|---|---|---|
| AI Supernova | ~$40M | ~$125M | ~25% | ~$1.13M |
| AI Shooting Star | ~$3M | Quadrupling YoY | ~60% | ~$164K |

Note: Data based on Bessemer Venture Partners’ “State of AI 2025” survey of top-performing startups.
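Working through the table’s approximate figures shows how the two profiles trade margin for scale. The Shooting Star’s Year 2 ARR is inferred here by applying exactly 4x growth to ~$3M; all values are the rounded survey figures, not precise data.

```python
# Approximate table figures; the Shooting Star's Year 2 ARR assumes exactly 4x growth.
PROFILES = {
    "AI Supernova": {"arr_y1": 40e6, "arr_y2": 125e6, "margin": 0.25, "arr_per_fte": 1.13e6},
    "AI Shooting Star": {"arr_y1": 3e6, "arr_y2": 3e6 * 4, "margin": 0.60, "arr_per_fte": 164e3},
}


def summarize(profile: dict) -> dict:
    """Year-over-year growth multiple and Year 2 gross profit for one profile."""
    return {
        "growth_multiple": profile["arr_y2"] / profile["arr_y1"],
        "gross_profit_y2": profile["arr_y2"] * profile["margin"],
    }


for name, profile in PROFILES.items():
    s = summarize(profile)
    print(f"{name}: {s['growth_multiple']:.1f}x growth, "
          f"Year 2 gross profit ~${s['gross_profit_y2'] / 1e6:.1f}M")

fte_ratio = PROFILES["AI Supernova"]["arr_per_fte"] / PROFILES["AI Shooting Star"]["arr_per_fte"]
print(f"Supernova ARR per FTE is ~{fte_ratio:.1f}x the Shooting Star's")
```

On these rounded figures, the Supernova generates roughly four times the Shooting Star’s Year 2 gross profit (about $31M versus $7M) despite its much thinner margin, and about 6.9 times the ARR per employee.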

Security, Governance, and the “Legal Math” of 2026

As AI moves into the core of enterprise operations, classification criteria have expanded to include rigorous standards for data privacy, sovereignty, and legal compliance. The concept of “Zero Trust” has moved from the network layer to the agent layer, where every AI agent is treated as a “first-class identity” and governed with the same rigor as human identities.
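A minimal sketch of treating an agent as a first-class identity: each agent carries its own record with an accountable owner, explicit scopes, and an expiry, and every action is checked deny-by-default, just as a human user’s access would be. The `AgentIdentity` type and scope strings are hypothetical; a real deployment would back this with an identity provider rather than an in-memory object.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    owner: str              # accountable human or team
    scopes: frozenset[str]  # explicitly granted permissions only
    expires_at: datetime    # short-lived credential, re-issued on rotation


def authorize(identity: AgentIdentity, scope: str, now: datetime | None = None) -> bool:
    """Deny by default: allow only an unexpired identity that holds the exact scope."""
    now = now or datetime.now(timezone.utc)
    return now < identity.expires_at and scope in identity.scopes


refund_agent = AgentIdentity(
    agent_id="refund-agent-eu",
    owner="support-ops",
    scopes=frozenset({"refunds:read", "refunds:create"}),
    expires_at=datetime.now(timezone.utc) + timedelta(hours=1),
)
print(authorize(refund_agent, "refunds:create"))   # True
print(authorize(refund_agent, "payments:export"))  # False
```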

Agentic Governance and Sovereignty

“Sovereign AI” has emerged as a major classification for products that meet specific national or regional information security standards, such as FedRAMP in the U.S. or the VSA in Germany. This involves not only where the data is stored but also the provenance of the physical assets and intellectual property used to build the models.

Furthermore, “Intent-driven ERP” and “Generative UI” are emerging as new categories where the software itself continuously learns and adapts to user intent, rather than following static, deterministic rules. These systems require a “continuously learning intelligence layer” grounded on semantically rich knowledge graphs to remain dependable.

The $3,000 “Legal Math” Baseline

Following landmark legal settlements in 2025, venture capitalists and enterprise buyers now perform intensive “Data Provenance” audits. The $1.5 billion Anthropic settlement, which covered roughly 500,000 books allegedly downloaded from pirate libraries for use in training, established a “legal math” baseline of approximately $3,000 per copyrighted work. Investors now treat this figure as an informal “floor price” for content licensing and use it to estimate potential hidden liabilities during Series A due diligence.
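The “legal math” is plain multiplication. Dividing the settlement by the per-work figure recovers the approximate number of works covered, and the same figure can be applied to a training-data inventory to estimate exposure. The inventory below is hypothetical, and treating only non-licensed works as exposed is a simplifying assumption, not a legal standard.

```python
from __future__ import annotations

PER_WORK_BASELINE = 3_000  # USD per copyrighted work, per the settlement figure


def implied_works(settlement_usd: float, per_work: float = PER_WORK_BASELINE) -> float:
    """Approximate number of works a settlement of this size covers."""
    return settlement_usd / per_work


def estimated_exposure(datasets: list[dict], per_work: float = PER_WORK_BASELINE) -> float:
    """Assumption: only works not documented as licensed carry exposure."""
    return sum(d["copyrighted_works"] * per_work
               for d in datasets if d["acquisition"] != "licensed")


inventory = [  # hypothetical training-data inventory
    {"name": "licensed-news-corpus", "acquisition": "licensed", "copyrighted_works": 20_000},
    {"name": "web-crawl-books", "acquisition": "scraped", "copyrighted_works": 1_500},
    {"name": "generated-dialogues", "acquisition": "synthetic", "copyrighted_works": 0},
]

print(implied_works(1.5e9))           # 500000.0
print(estimated_exposure(inventory))  # 4500000
```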

Investors now require a “Data Governance Package” that includes:

- A complete inventory of every training dataset by source.

- Clear documentation of acquisition methods (licensed, scraped, or synthetic).

- Terms of Service (ToS) analysis confirming compliance for any scraped data.

- Legal memos justifying “Fair Use” based on transformative purposes.

Without this documentation prepared in advance, fundraising timelines can stretch by 60 or more days while VCs require retroactive verification.
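Preparing the Data Governance Package ahead of diligence is partly a completeness check. The sketch below flags inventory entries that would trigger retroactive verification; the field names (`source`, `acquisition`, `tos_reviewed`, `fair_use_memo`) are hypothetical stand-ins for the four items listed above, and requiring a fair-use memo only for scraped data is an assumption.

```python
from __future__ import annotations

ALLOWED_METHODS = {"licensed", "scraped", "synthetic"}


def governance_gaps(dataset: dict) -> list[str]:
    """List missing governance items for one training dataset (empty = documented)."""
    gaps = []
    if not dataset.get("source"):
        gaps.append("missing source")
    method = dataset.get("acquisition")
    if method not in ALLOWED_METHODS:
        gaps.append("undocumented acquisition method")
    if method == "scraped":
        # Assumption: scraped data needs both a ToS review and a fair-use memo.
        if not dataset.get("tos_reviewed"):
            gaps.append("no ToS analysis for scraped data")
        if not dataset.get("fair_use_memo"):
            gaps.append("no fair-use memo for scraped data")
    return gaps


def package_report(inventory: list[dict]) -> dict[str, list[str]]:
    """Map each incompletely documented dataset to its gaps."""
    report = {}
    for dataset in inventory:
        gaps = governance_gaps(dataset)
        if gaps:
            report[dataset.get("name", "<unnamed>")] = gaps
    return report


inventory = [  # hypothetical entries
    {"name": "vendor-reviews", "source": "data vendor contract", "acquisition": "licensed"},
    {"name": "forum-crawl", "source": "public forums", "acquisition": "scraped",
     "tos_reviewed": True},
    {"name": "legacy-dump"},
]
print(package_report(inventory))
```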

Critical Issues and Failure Modes in Classification

The rapid expansion of the AI SaaS market has revealed several “cracks” in the classification process that pose existential threats to startups and enterprises alike.

The “Thin Wrapper” Trap

The most common cause of failure in 2025–2026 is the “thin wrapper” problem: startups that build attractive user interfaces on top of third-party APIs from labs like OpenAI or Google without adding a proprietary “data moat” or deep workflow integration. Without either, a single platform update from the API provider can render the entire product obsolete overnight.

| Failure Mode | Description | Impact |
|---|---|---|
| Thin Wrapper | Rented technology with no proprietary AI or workflow depth. | 90% projected failure rate by 2026. |
| Agent Debt | Adopting autonomous agents faster than they can be governed. | Fragmented silos and untraceable liabilities. |
| Retention Apocalypse | High churn rates due to low switching costs and “experimentation fatigue.” | Existential threat to long-term valuation. |
| Sunk Cost Engineering | Spending 12+ months on “architectural purity” instead of shipping MVPs. | Competitors capture the market with simpler, faster iterations. |

The Retention Apocalypse and Agent Debt

Industry experts have warned of a “Gross Retention Apocalypse” in 2026. While net revenue retention (NRR) may look healthy thanks to fast-growing segments, logo churn is proving to be a lagging indicator of lost customer trust. Companies are increasingly classified by their ability to deliver “expeditious value”: products that demonstrate a 10x improvement over manual methods in the first session or within the first quarter of deployment.
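The gap between healthy NRR and weak gross retention follows from the standard formulas: gross revenue retention (GRR) counts only churn and contraction against starting ARR, while NRR also adds back expansion, so a few fast-growing accounts can mask widespread customer loss. The cohort figures below are hypothetical.

```python
def gross_revenue_retention(start_arr: float, churned: float, contraction: float) -> float:
    """GRR: share of starting ARR kept; expansion is excluded, so it never exceeds 100%."""
    return (start_arr - churned - contraction) / start_arr


def net_revenue_retention(start_arr: float, churned: float, contraction: float,
                          expansion: float) -> float:
    """NRR: like GRR, but expansion from remaining customers is added back."""
    return (start_arr - churned - contraction + expansion) / start_arr


def logo_churn(start_customers: int, lost_customers: int) -> float:
    """Share of customers (logos) lost, regardless of their revenue."""
    return lost_customers / start_customers


# Hypothetical cohort: $10M starting ARR across 200 customers.
grr = gross_revenue_retention(10_000_000, 2_000_000, 500_000)
nrr = net_revenue_retention(10_000_000, 2_000_000, 500_000, 3_500_000)
churn = logo_churn(200, 60)
print(f"GRR {grr:.0%}, NRR {nrr:.0%}, logo churn {churn:.0%}")
# GRR 75%, NRR 110%, logo churn 30%
```

Here an NRR above 100% coexists with losing nearly a third of customers, which is the pattern the retention warning describes.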

“Agent debt” is another emerging classification issue. Organizations that deploy agents without central registries for prompts and decision logs find themselves with multiple, conflicting “versions of reality”. For example, one region’s refund agent might auto-refund all requests while another escalates them, with neither behavior being transparent to leadership.
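A central registry can surface agent debt before it reaches customers. The sketch assumes each deployed agent registers its business function, region, prompt version, and decision policy, and it reports any function whose regions have diverged, as in the refund example above. All class names, fields, and values are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    function: str        # business function, e.g. "refunds"
    region: str
    prompt_version: str  # which registered prompt the agent runs
    policy: str          # decision policy, e.g. "auto_refund" or "escalate"


class AgentRegistry:
    """Hypothetical central registry of deployed agents and their decisions."""

    def __init__(self) -> None:
        self.records: list[AgentRecord] = []
        self.decision_log: list[tuple[str, str, str]] = []  # (function, region, decision)

    def register(self, record: AgentRecord) -> None:
        self.records.append(record)

    def log_decision(self, function: str, region: str, decision: str) -> None:
        self.decision_log.append((function, region, decision))

    def divergent_policies(self) -> dict[str, dict[str, str]]:
        """Functions whose regions run different policies, as {function: {region: policy}}."""
        by_function: dict[str, dict[str, str]] = defaultdict(dict)
        for r in self.records:
            by_function[r.function][r.region] = r.policy
        return {f: regions for f, regions in by_function.items()
                if len(set(regions.values())) > 1}


registry = AgentRegistry()
registry.register(AgentRecord("refunds", "us", "v3", "auto_refund"))
registry.register(AgentRecord("refunds", "eu", "v2", "escalate"))
registry.register(AgentRecord("pricing", "us", "v1", "list_price"))
registry.log_decision("refunds", "us", "auto_refund order-42")
print(registry.divergent_policies())
# {'refunds': {'us': 'auto_refund', 'eu': 'escalate'}}
```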

Solutions and Implementation Strategies for Stakeholders

To successfully navigate this landscape, both developers and buyers must adopt structured frameworks for evaluation and operationalization.

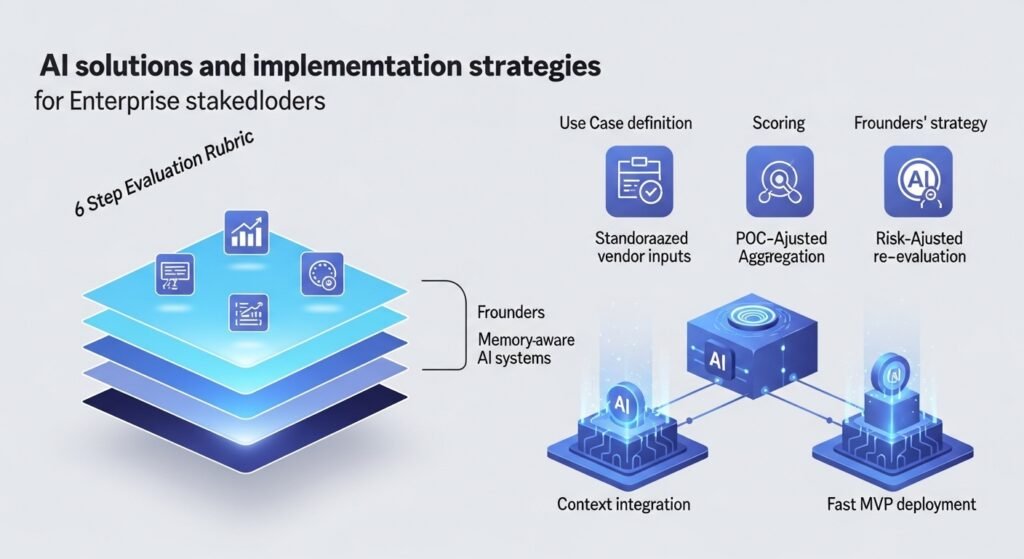

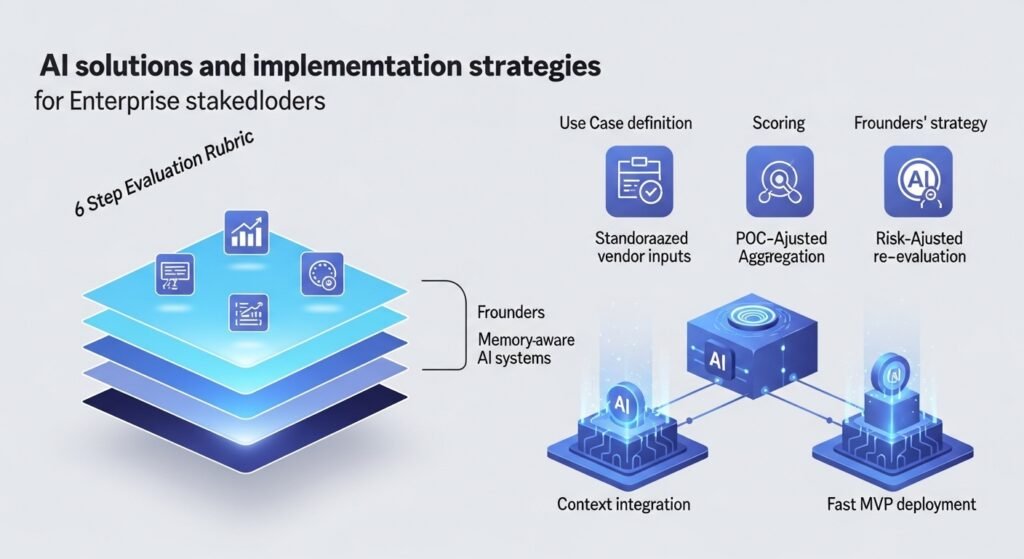

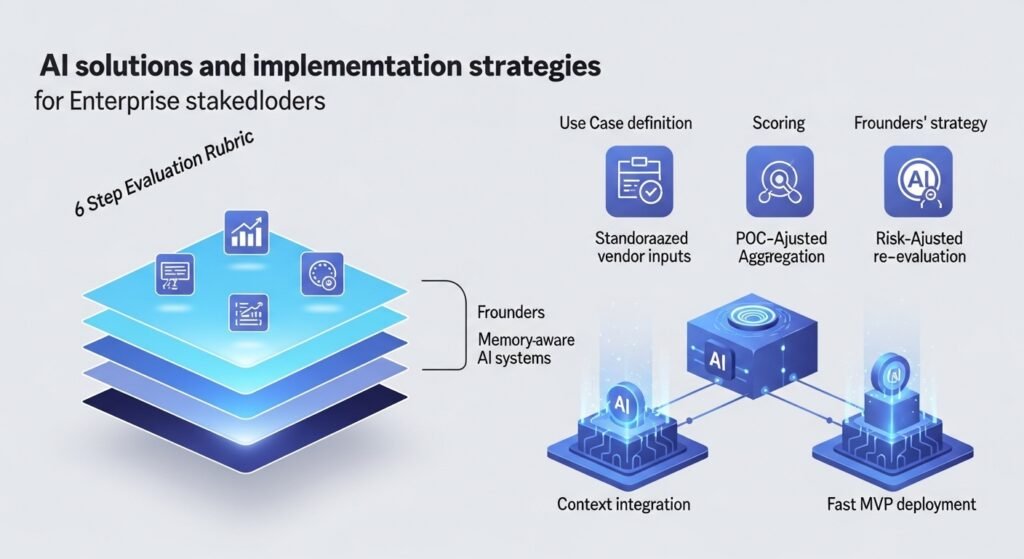

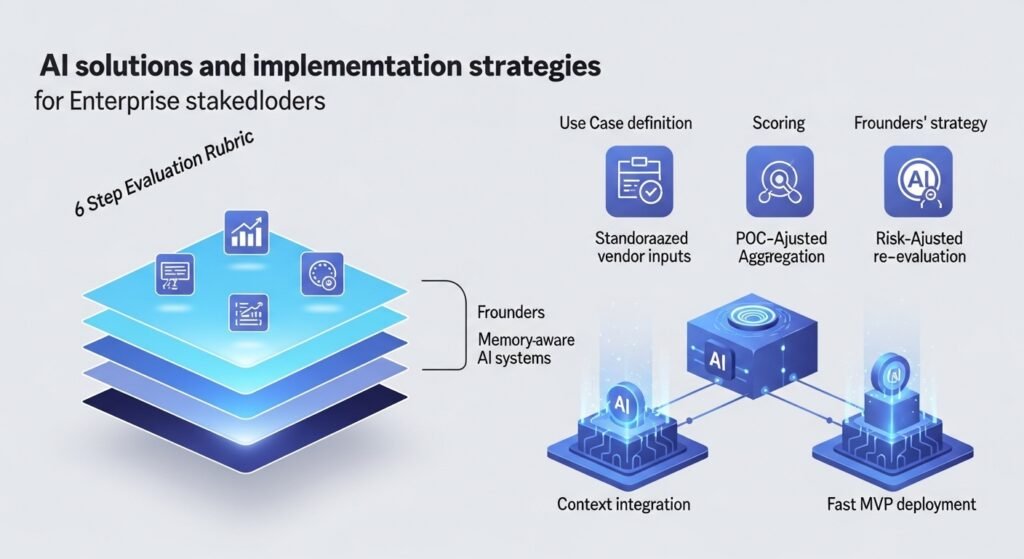

A Six-Step Evaluation Rubric for Enterprise Buyers

Enterprise procurement teams are advised to move beyond “dazzling demos” and apply a quantitative scoring rubric across all classification dimensions.

- Define Use Cases and Weights: Precisely define business challenges and assign weights to criteria like data policy, interpretability, and risk endurance.

- Standardized Vendor Inputs: Use a standardized questionnaire to obtain technical, security, and commercial evidence, including architecture diagrams and model documentation.

- Apply a 0-5 Scoring Scale: Assess each dimension; any score below a minimum acceptable threshold should be identified as a “deal-breaker”.

- Shortlist and Validate via POC: Conduct a focused Proof of Concept (POC) to validate that the product achieves results in alignment with company KPIs.

- Aggregate Risk-Adjusted Scores: Combine performance scores with risk indicators to facilitate a balanced selection process.

- Periodic Re-Evaluation: Translate evaluation results into contracts and SLAs, then reapply the matrix periodically to reflect model updates or changes in business needs.
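The scoring steps can be sketched as a weighted, risk-adjusted rubric with deal-breaker thresholds. The criteria, weights, minimums, and the multiplicative risk adjustment are hypothetical choices for illustration; procurement teams would substitute their own.

```python
from __future__ import annotations

# Hypothetical criterion weights (summing to 1.0) and minimum acceptable scores.
WEIGHTS = {"data_policy": 0.3, "interpretability": 0.2, "performance": 0.3, "integration": 0.2}
MINIMUMS = {"data_policy": 3, "interpretability": 2, "performance": 3, "integration": 2}


def evaluate(scores: dict[str, int], risk: float) -> dict:
    """Score one vendor on a 0-5 scale; risk is a 0-1 penalty (0 = no identified risk)."""
    # Any criterion below its minimum is a deal-breaker.
    deal_breakers = [c for c, minimum in MINIMUMS.items() if scores[c] < minimum]
    weighted = sum(WEIGHTS[c] * scores[c] for c in WEIGHTS)
    # Assumed multiplicative risk adjustment.
    return {"deal_breakers": deal_breakers, "weighted": weighted,
            "risk_adjusted": weighted * (1 - risk)}


vendors = {
    "vendor_a": evaluate({"data_policy": 4, "interpretability": 3,
                          "performance": 5, "integration": 4}, risk=0.10),
    "vendor_b": evaluate({"data_policy": 2, "interpretability": 4,
                          "performance": 5, "integration": 5}, risk=0.0),
}
# Shortlist for the POC: drop deal-breakers, then rank by risk-adjusted score.
shortlist = sorted((name for name, r in vendors.items() if not r["deal_breakers"]),
                   key=lambda name: vendors[name]["risk_adjusted"], reverse=True)
print(shortlist)  # ['vendor_a']
```

Vendor B has the stronger raw performance and integration scores, but its data-policy score falls below the minimum, so it never reaches the POC.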

The Founder’s Strategy: Building a Defensible Moat

For AI SaaS founders, the path to longevity involves moving beyond “AI for AI’s sake” and identifying painful problems where AI genuinely provides a 10x improvement. Strategic priorities for 2026 include:

- Context and Memory as Moats: Building flexible, memory-aware systems with low-latency recall that turn context into a compounding advantage over time.

- Deep Integration: Embedding AI into truly core workflows where switching costs are high—often requiring integration with “messy, unstructured data” locked in legacy systems.

- Scrappy Velocity over Architectural Purity: High-performing teams focus on stitching together APIs and getting MVPs in front of users in two weeks to gather feedback, rather than spending months on rigid architectures.

Conclusion: The Convergence of Capability and Constraint

The landscape of AI SaaS product classification in 2025–2026 reflects a maturation of the field from technological curiosity to enterprise necessity. The central claim is that the current arc is “capability-rich yet autonomy-limited”. While models have become increasingly effective within well-instrumented domains, their open-world action remains constrained by compounding errors, prompt-injection risks, and increasing demands for accountability.

Successful classification now requires a synthesis of technical architecture (Native vs. Enhanced), market positioning (Horizontal vs. Vertical), and agentic maturity (Levels 1-4). As the “fog of the early calamity” lifts, the products that will define the $3.5 trillion market of 2033 are those that prioritize “system discipline,” evaluating progress not through benchmarks alone but through the seamless integration of intelligence, governance, and measurable economic value. Organizations that master this taxonomy will be well-positioned to avoid accumulating “agent debt” and to lead the next wave of digital transformation.

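FAQs

How large is the AI SaaS market?

The AI segment of SaaS is projected to reach approximately $126 billion by the end of 2025, within a broader $300 billion SaaS ecosystem.

What is the difference between AI-native and AI-enhanced products?

AI-native: Built from the ground up with AI at its core.

AI-enhanced: AI features added to legacy systems.

How do horizontal and vertical AI SaaS differ?

Horizontal: Works across industries.

Vertical: Specialized for one industry, with stronger defensive moats.

What are the four levels of agentic maturity?

- Level 1 – AI-Assisted Tasks

- Level 2 – Partial Agent Workflows

- Level 3 – Agent-Led Execution

- Level 4 – Fully Orchestrated Systems

What is the difference between automation and orchestration?

Automation runs individual tasks; orchestration coordinates multiple agents dynamically.

What is the “legal math” baseline?

Approximately $3,000 per copyrighted work, used as the baseline in training-data provenance audits.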